For the Wine Connoisseur

All photos are courtesy of KK. Any exceptions are labelled with whose copyright they are.

Before I get into all of that: I was curious about Canada and, taking the opportunity of DebConf happening there in a few months, asked a few people what they thought of digital payments, fees and expenses in their country, and whether plastic cash is indeed used there.

The first to answer was Tyler McDonald (no idea if he is in any way related to the fast-food chain McDonald's, which is a worldwide operation). This is what he had to say:

You can use credit / debit cards almost everywhere. Restaurant waiters also usually have wireless credit / debit terminals that they will bring to your table for you to settle your bill.

How much your bank charges depends on your Canadian bank and the banking plan you are on. For instance, on my plan with the Bank of Montreal, I get (I think) 20 free transactions a month, and after that I'm charged $0.50 CDN per transaction. However, if I go to a Bank of Montreal ATM and withdraw cash, there is no service fee for that.

There is no service fee for using *credit* cards; only *debit* cards tend to have the fee.
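To make the arithmetic of Tyler's plan concrete, here is a minimal Python sketch, assuming the numbers he gave (20 free debit transactions a month, then $0.50 CDN each; he himself was not sure about the 20). The function `monthly_debit_fees` and its parameters are hypothetical, not any bank's tool, and the sketch only counts fee-bearing debit transactions: credit card use and cash withdrawals at his own bank's ATMs, which he says are free, are left out.

```python
from decimal import Decimal


def monthly_debit_fees(transactions: int,
                       free_quota: int = 20,
                       fee_each: Decimal = Decimal("0.50")) -> Decimal:
    """Return the service fee (in CAD) for one month of debit transactions.

    Assumption: the defaults follow the plan described above (first 20 free,
    then $0.50 each); real plans and numbers vary from bank to bank.
    """
    if transactions < 0:
        raise ValueError("transactions must be non-negative")
    # Only the transactions beyond the free quota are charged.
    billable = max(0, transactions - free_quota)
    return billable * fee_each


if __name__ == "__main__":
    for n in (12, 20, 26):
        print(n, "transactions ->", monthly_debit_fees(n), "CAD")
```

For example, 26 debit transactions in a month would come to $3.00 CDN under these assumptions (6 billable transactions at $0.50 each), which is why paying by credit and clearing the balance right away, or asking for cash back, stretches the free quota.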

I live in a really rural area, so I can't always get to a Bank of Montreal machine for cash. So what I usually end up doing is either paying by credit and then paying off the balance right away so I don't have to pay interest, or, when I do use my bank card to pay for something, asking if I can get cash back as well.

Yes, Canada converted to plastic notes a few years ago. We've also eliminated the penny. For cashless transactions, you pay the exact amount billed. If you're paying somebody in cash, the total is rounded up or down to the nearest 5 cents. And for $1 and $2, we've moved over to coins instead of notes.

I personally like the plastic notes. They're smoother and feel more durable than the paper notes. I've had one go through a laundry load by accident and it came out the other side fine.
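The cash-rounding rule Tyler describes can be sketched in a few lines of Python. This is a minimal illustration, assuming totals are in whole cents and using `Decimal` to avoid binary floating-point surprises; `round_cash_total` and `amount_due` are hypothetical names. Because there is no such thing as half a cent, rounding to the nearest 5 cents never hits a tie: totals ending in 1-2 or 6-7 cents go down, those ending in 3-4 or 8-9 go up.

```python
from decimal import Decimal, ROUND_HALF_UP

NICKEL = Decimal("0.05")
CENT = Decimal("0.01")


def round_cash_total(total: Decimal) -> Decimal:
    """Round a bill to the nearest 5 cents, as described for cash payments."""
    # Express the total as a number of 5-cent units, round that to a whole
    # number, then convert back to dollars with two decimal places.
    nickels = (total / NICKEL).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
    return (nickels * NICKEL).quantize(CENT)


def amount_due(total: Decimal, paying_cash: bool) -> Decimal:
    """Cashless payments are charged exactly; cash payments are rounded."""
    return round_cash_total(total) if paying_cash else total


if __name__ == "__main__":
    for bill in ("4.01", "4.02", "4.03", "4.07", "4.08"):
        print(bill, "->", amount_due(Decimal(bill), paying_cash=True))
```

So a $4.02 bill paid in cash becomes $4.00, while the same bill paid by card stays $4.02.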

Another gentleman responded with slightly more information, which would probably interest travellers from around the world, not just Indians:

Quebec has its own interbank system called Interac (https://interac.ca/en/about/our-company.html). Quebec is a very proud and independent region, and for many historical reasons they want to stand on their own, which is why they support their local systems. Some vendors will support only Interac for debit card transactions (at least this was the case when I stayed there at the beginning of this decade; it might have changed a bit). *Most* vendors (including supermarkets like Provigo, Metro, etc.) will accept major credit and debit cards, although MasterCard isn't accepted as widely there as Visa. So, if you have one of each, load up your Visa card instead of your MasterCard, or get a prepaid Visa card from your bank. They support chip cards everywhere, so don't worry about that. If you have a 5-digit PIN on any of your cards and a vendor asks for a 4-digit PIN, it will work 90%+ of the time if you just enter the first 4 digits, but it's usually a good idea to change your PIN to 4 digits just to be safe.

From the Indian perspective, all of the above fits pretty neatly, as we also have chip-and-PIN cards (domestically, though, most ATMs still use the magnetic strip, and it is suspected that the POS terminals aren't any better). That would be a whole different story, so it's probably best left for another day.

I do like the tip about pocketing the change. As far as the number of free transactions goes, it had been pretty limited in India for a few years before the demonetization happening now.

A few years ago, I remember doing as many ATM transactions as I pleased, but ATMs have since seen a downward spiral in terms of technology upgrades, maintenance, etc. There is no penalty to the bank if an ATM is out of order. If there were a significant penalty, we would probably have seen banks take more care of their ATMs. It is a slightly more complex topic, so I will take a stab at it some other day.

I do hope, though, that the terms of ATM usage for bank customers become as lenient as Canada's; otherwise it will be difficult for Indians to jump on the digital bandwagon, as you cannot function without cheap, user-friendly technology.

The image has been taken from this fascinating article, which appeared in the Indian Express a couple of days back.

Coming back to the cheese and wine in the evening. I think we started back from Eagle Encounters around 16:30/17:00 Cape Town time. Somehow the ride back was much faster, and we played some Bollywood party music on the way (all cool).

Suddenly I remembered that I had to buy some cheese, as I hadn't brought any from India. There is quite a post where I was trying to find out whether spices could be smuggled in (which, much later, I learnt I didn't need to do, but that's a different story altogether). I also had off-list conversations with people about cheese but wasn't able to get any good recommendations. Then I saw that KK had brought Mysore Pak (apparently she took a chance by not declaring it), which, while not exactly cheese, fit right into things. In KK's own words, it is "a South Indian ghee sweet fondly nicknamed the blocks of cholesterol and reason #3 for bypass surgery".

So, with Leonard's help, we stopped at a place that looked like a row of shops, each selling something different. Seeing that, I was immediately transported to Connaught Place, Delhi.

The image comes from http://planetden.com/food/roundabout-world-connaught-place-delhi, which attempts to explain Connaught Place. While the article is OK-ish, it lacks soul and isn't written the way a Delhiite, or anybody who has spent a chunk of their holidays at CP, would write it. Another day, another story, sorry.

What I found interesting was that, among the stores next to each other, I also spotted an alcohol shop as well as an adult/sex shop. I asked Leonard how far we were from UCT; he replied hardly 5 minutes by car, and I was shocked to see both an alcohol shop and a sex shop so close. An alcohol shop some distance away from a college is understandable (there are a few near colleges all over India), but adult shops are a rarity.

Unfortunately, none of us have any photos of the place, as by that time everybody's phone was dead or about to die, and nobody had thought to bring a portable power bank to juice up our mobile devices.

A part of me was curious to see what the sex shop would have and what it looked like from inside, but as I was with younger people, I didn't think it was appropriate.

Jaminy and someone else (besides Leonard) decided to stay back, while the rest of us went inside to explore the stores. It took me some time to make my way to the cheese corner, and I had no idea which cheeses were good and which weren't. With no knowledge of the brands there, the only way to judge was by price. So I bought two: a larger, cheaper 500 g piece and a smaller, slightly more expensive one, just to make sure that the Debian cheese team would be happy.

We did have a mini-adventure, as for some time Jaminy was missing; apparently she had gone goofing off or to freshen up or something, and we were unable to reach her as all our phones were dead or dying.

Eventually we came back to UCT and had barely freshened up when our group decided to go and give our share of goodies to the cheese and wine party. When I went up to the room to share the cheese, I came to know they needed a volunteer for cutting veggies, etc.

Having spent years watching Yan Can Cook, and having practised quite a bit, I tried to do some fancy decoration and some julienne cutting, but as we had dull knives and not much time, I just did some plain old cutting.

The Salads, partly done by me.

I have to share that I had a fascinating discussion about cooking in pressure cookers. I was under the assumption that everybody knows how to use pressure cookers, as they are one of the simplest ways to cook food while retaining most of its nutrients. At least, I believe this to be predominant in the Indian subcontinent, and even the Chinese have similar vessels for cooking.

I use what is called a first-generation pressure cooker. I have been using a 1.5 l Prestige pressure cooker for over half a decade, almost daily, without issues.

http://www.amazon.in/Prestige-Nakshatra-Aluminium-Pressure-Cooker/dp/B00CB7U1OU

1.5 Litre Pressure Cooker with gasket and everything.

There are also induction pressure cookers in the Indian market nowadays, such as this model:

https://www.amazon.in/Prestige-Deluxe-Induction-Aluminum-Pressure/dp/B01KZVPNGE/ref=sr_1_2

Best cooker for doing Basmati Biryanis and things like that.

Basmati is a long-grain, aromatic rice which most families use on very special occasions such as festivals and marriages; anything good and pure is associated with it.

I had also shared my lack of knowledge of industrial microwave ovens. While I do get most small microwave ovens like these, I simply have no clue about cooking in industrial ovens.

Anyway, after that conversation I went back, freshened up a bit and some time later found myself in the middle of this:

Random selection of wine bottles from all over the world.

At times I also found myself in the middle of this:

CHOCOLATES

I tried quite a few chocolates, but the one I liked best (I don't remember the name) was a white caramel chocolate which literally melted in my mouth. Got the whole died-and-went-to-heaven experience. Who said gluttony is bad?

Or this

French Bread, Wine and chaos

As can be seen, the French really enjoy their bread. I vaguely remember a story (I don't remember if it was a children's fairy tale or something) about how the French won a war with their French bread.

Or this

Juices for those who love their health

We also had juices for teetotallers or those who can't handle drinks. Unsurprisingly perhaps, by the end of the session almost all the different wines were finito, while there were still some juices left to go around.

From the Indian perspective, it wasn't at all exciting: there were no brawls, everybody was too civilized, and everybody staggered off when they had met their quota. As I was in a holiday spirit, I stayed up late, staggered to my room, blissed out and woke up without any headache.

Pro tip: drink lots and lots of water, especially if you are drinking alcohol. It helps flush out toxins and also helps prevent morning-after headaches. If I'm going drinking, I usually drown myself in at least a litre or two of water, even if it means going to the bathroom a couple of times before going to bed.

All in all, a perfect evening. I was able to connect and talk with some of the gods I had wanted to talk to for a long time, and they actually listened. I don't remember if I mumbled something or made some sense in small talk or whatever I did. But as I said, a perfect evening.

Filed under: Miscellenous

Tagged: #ATM usage, #Canada, #Cheese and Wine party, #Cheese shopping, #Connaught Place Delhi, #Debconf16, #Debit card, #French bread, #Julian cutting, #Mysore Pak, #white caramel chocolate

Here is my monthly update covering what I have been doing in the free software world in September 2017 (

I am starting work with the students of

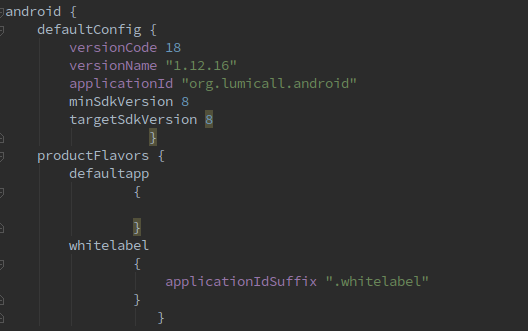

For the past few weeks I have been researching and working on creating a white-label version of Lumicall with my mentors Daniel, Juliana and Bruno.

Lumicall is a free and convenient app for making encrypted phone calls from Android. It uses the SIP protocol to interoperate with other apps and corporate telephone systems. Think of any app that you use to call others using a SIP ID.

Note: You have to define at least two flavors to be able to build multiple variants.
